
Key Takeaways

- Kubernetes (K8s) is a container orchestration tool used to scale container systems, while Docker is used to build, test, and deploy apps faster than traditional methods allow.

- Kubernetes allows you to define complex containerized apps and run them at scale across a cluster of servers, while Docker enables you to standardize application operations and deliver code faster.

- When the two tools are used together, Kubernetes acts as the orchestrator for the Docker containers. K8s can also automate scaling and deployment operations.

- Used together, Kubernetes and Docker are more powerful and flexible for large, complex containerized applications.

Note: Kubernetes and Docker can’t be compared directly because they serve different purposes in the containerization and orchestration landscape. You’ll get a deeper understanding of the two after reading the following blog post.

Kubernetes and Docker are two open-source platforms that stand out as market leaders in the container arena. Both can help software development companies with container management, each in its own way. The debate is commonly framed as a choice: which one should you use, Kubernetes or Docker? In this post, we will explore the answer to the Kubernetes vs Docker debate and look at their significant advantages when in use.

docker’s main function is to create images and containers.

— Francesco (@FrancescoCiull4) May 24, 2021

Kubernetes’ main function is to orchestrate containers.

One very confusing question I get sometimes is:

“Shall I just use Kubernetes now ??”

This is a wrong question because they do different things.

…

1. What is Docker?

Docker, a commercialized containerization platform and container runtime, is used to create, launch, and execute containers. It uses a client-server architecture and exposes a single API for both automation and basic instructions. None of this should come as a surprise, as Docker was essential in popularizing the idea of containers, which in turn sparked the development of tools like Kubernetes.

Docker is a tool that makes it easier to create, deploy, and run applications using containers. Containers allow a developer to package up an application with all the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

— Simon (@simonholdorf) January 7, 2023

Docker also has a toolkit that is often used to bundle apps into immutable container images. This is done by writing a Dockerfile and then having the Docker daemon run the instructions in it to build the container image.

While developers are not required to use Docker to work with containers, it does make the process simpler. These container images may then be used on any container-friendly platform.
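To make the Dockerfile step more concrete, here is a minimal sketch in Python that writes a small Dockerfile to disk and assembles the `docker build` command that would turn it into an image. The base image tag, the file names (`requirements.txt`, `app.py`), and the image tag `my-app:1.0` are assumptions chosen for illustration, not something this post prescribes. The sketch only constructs the command; it does not run it.

```python
import tempfile
from pathlib import Path

# Hypothetical Dockerfile for a small Python app. The base image tag, file
# names, and start command are assumptions made for this illustration.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "app.py"]
"""


def write_dockerfile(project_dir: Path) -> Path:
    """Write the Dockerfile into project_dir and return its path."""
    path = project_dir / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


def build_command(project_dir: Path, tag: str) -> list[str]:
    """Return the docker CLI invocation that would build the image."""
    return ["docker", "build", "-t", tag, str(project_dir)]


with tempfile.TemporaryDirectory() as tmp:
    dockerfile = write_dockerfile(Path(tmp))
    # The first word of every line is the Dockerfile instruction.
    instructions = [line.split()[0] for line in dockerfile.read_text().splitlines()]
    command = build_command(Path(tmp), "my-app:1.0")

print(instructions)
print(command[:4])
```

Each instruction in the file produces one step of the image build, and the resulting image can then be pushed to a registry and run on any container-friendly platform.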

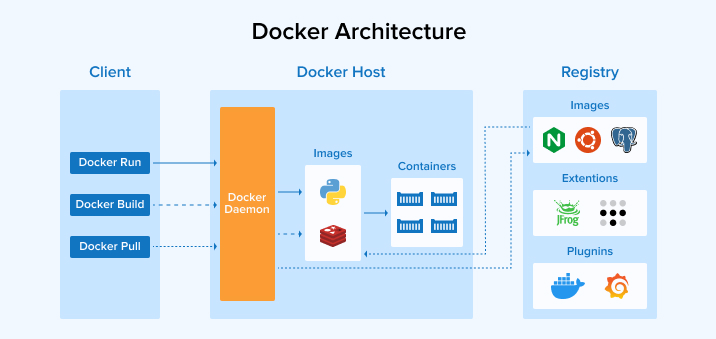

Let’s take a look at basic Docker architecture:

1.1 Docker Swarm

An alternative to Kubernetes, Docker Swarm is a container orchestration solution from Docker. It is native and open-source.

Docker Swarm offers the features you need for mass container deployment and administration, such as automatic load balancing, multi-host networking, and scaling, so you do not have to depend on a third-party orchestration tool.

The tool is lightweight and the installation process is pretty simple. And if you have any experience using the Docker ecosystem, then it’s easy to integrate as well.

If you are working with a few nodes or a simple application, then Docker Swarm proves to be an ideal option. But if you need to orchestrate a large number of nodes for a complex application, then you are better off with Kubernetes and its monitoring and security features. It offers great flexibility and resiliency.

1.2 Features of Docker

Open-Source Platform: The freedom to pick and choose among available technologies is a key feature of an open-source platform. The Docker engine might be helpful for solo engineers that need a minimal, clutter-free testing environment.

Accelerated and Effective Life Cycle Development: Docker’s main goal is to speed up the software development process by eliminating repetitive and mundane configuration tasks. The objective is to have your apps be lightweight, easy to build, and simple to work on.

Isolation and Safety: Docker’s built-in secrets management (available in Swarm mode) stores sensitive data within the cluster itself. Docker containers offer a high degree of isolation across programs, keeping them from influencing or impacting one another.

Simple Application Delivery: The ability to quickly and easily set up the system is among Docker’s most important characteristics. Because of this, deploying code is simple and quick.

Reduction in Size: Docker provides a lot of flexibility for cutting down on app size during development. The idea is that by using containers, the operating system footprint may be minimized.

1.3 Key Benefits of Using Docker

Following are the key advantages of using Docker:

- Send and receive container requests over a proxy.

- Manage the entire container lifecycle.

- Keep tabs on container activities.

- Containers do not get unrestricted access to all of your resources.

- `docker build` creates container images, and Docker Compose defines and runs multi-container applications.

- Data may be pushed to and pulled from image registries.

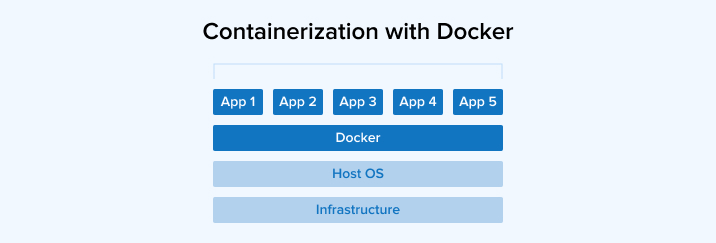

1.4 Rise of the Containerization with Docker

Isolating environments is not new, and Docker isn’t the only containerization platform out there, but it has become the de facto standard in recent years. One of Docker’s key components is the Docker Engine, a container runtime rather than a virtual machine. Any development computer may be used to create and execute containers, and a container registry, such as Docker Hub or Azure Container Registry, can be used to store or distribute container images.

Handling containers becomes more complicated when applications expand to use several of them across different hosts. Docker provides an open standard for delivering software in containers, but the associated complexity may quickly mount up.

When dealing with a large number of containers, how can you ensure that they are all scheduled and delivered at the right times? How does communication occur between the various containers in your app? Is there a method for efficiently increasing the number of running container instances? Let’s see if Kubernetes can answer these scenarios!

2. How Does Docker Work?

Docker comes with a client-server architecture. The client interacts with the Docker Daemon that takes care of everything including creating, running, and distributing the Docker containers. You can either connect the Docker client with a remote Docker Daemon or run them both on the same system.
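The client-server split can be illustrated with a few lines of Python that play the role of the client. This is a sketch using only the standard library, assuming a Linux or macOS host where the daemon listens on the default Unix socket `/var/run/docker.sock`; the class and function names are made up for this example. It sends a `GET /version` request to the Docker Engine API and returns `None` when no daemon is reachable.

```python
import http.client
import json
import os
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used for the Host header; no TCP is involved.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path: str = "/var/run/docker.sock"):
    """Ask the daemon for its version; return None if it cannot be reached."""
    if not os.path.exists(socket_path):
        return None
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        payload = json.loads(conn.getresponse().read())
        return payload.get("Version")
    except (OSError, http.client.HTTPException, ValueError):
        return None
    finally:
        conn.close()
```

Calling `daemon_version()` on a machine with a running daemon should return its version string. Pointing a client at a remote daemon changes only the transport (TCP instead of a local socket); the request itself stays the same.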

3. What is Kubernetes?

When it comes to deploying and managing containers or containerized applications, Kubernetes is one of the best open-source solutions. Kubernetes (K8s) tools, services, and support are widely available. According to the CNCF (Cloud Native Computing Foundation), 96% of organizations are either using or evaluating K8s.

In a Kubernetes cluster, the control plane allocates work to the worker nodes, the machines that actually run the containers. The control plane (historically called the master node) is responsible for deciding where apps will be hosted and how they will be organized and orchestrated.

Kubernetes simplifies the process of locating and managing the many components of an application by clustering them together. This makes it possible to manage large numbers of containers over their entire lifecycles. K8s has a Container Runtime Interface (CRI) plugin that enables the use of a wide variety of container runtimes. There is no need to recompile the cluster components while using CRI.

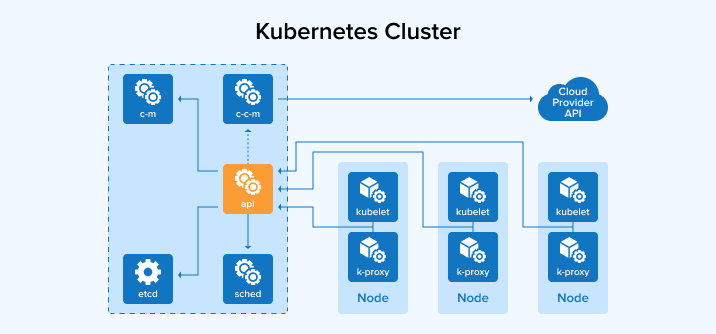

3.1 Kubernetes Architecture

There are three main components to know about in the Kubernetes architecture.

- Control Plane: It manages the overall state of the cluster. It includes the Kubernetes API server and is responsible for scheduling and scaling applications.

- Nodes: The compute resources required to deploy an app come from the nodes. They are the worker machines and they run containers. The nodes can be either physical machines or virtual machines that run in the cloud.

- Pods: The smallest deployable units in Kubernetes are called Pods. They hold one or more containers that share the same storage volumes and network namespace. Pods are ephemeral by design. Based on the app requirements, they are easy to replace and easy to scale.
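To show what "containers with the same storage volumes" looks like in practice, the following sketch builds a Pod manifest as a plain Python dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The Pod name, the image tags, and the sidecar command are assumptions for illustration only.

```python
import json


def pod_manifest(name: str) -> dict:
    """Build a Pod with two containers that mount the same emptyDir volume."""
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # One volume, declared once at the Pod level.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.27",  # assumed image tag
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "sidecar",
                    "image": "busybox:1.36",  # assumed image tag
                    "command": ["sh", "-c", "date > /data/index.html && sleep 3600"],
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


manifest = pod_manifest("demo")
print(json.dumps(manifest, indent=2))
```

Both containers see the same `/data` directory, and because they live in one Pod they also share a network namespace and can reach each other on `localhost`.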

Let’s take a look at the Kubernetes cluster.

3.2 Features of Kubernetes

Bin Packing Automation: With Kubernetes, containers are placed automatically according to their resource needs and the resources available on each node, without compromising on availability. Kubernetes mixes critical and best-effort workloads to make full use of resources and reduce cost.
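The idea behind bin packing can be sketched with a toy scheduler. The code below is not how the Kubernetes scheduler is implemented; it is a simple first-fit-decreasing heuristic, with hypothetical node and pod names, that places each pod on the first node with enough free CPU and memory.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores
    free_mem_mib: int  # free memory in MiB
    pods: list = field(default_factory=list)

    def fits(self, cpu_m: int, mem_mib: int) -> bool:
        return cpu_m <= self.free_cpu_m and mem_mib <= self.free_mem_mib

    def place(self, pod: str, cpu_m: int, mem_mib: int) -> None:
        self.free_cpu_m -= cpu_m
        self.free_mem_mib -= mem_mib
        self.pods.append(pod)


def schedule(pods, nodes):
    """First-fit-decreasing: handle the largest requests first.

    Returns the names of pods that did not fit on any node.
    """
    unscheduled = []
    for pod, cpu_m, mem_mib in sorted(pods, key=lambda p: (p[1], p[2]), reverse=True):
        target = next((n for n in nodes if n.fits(cpu_m, mem_mib)), None)
        if target is None:
            unscheduled.append(pod)
        else:
            target.place(pod, cpu_m, mem_mib)
    return unscheduled


nodes = [Node("node-a", 2000, 4096), Node("node-b", 1000, 2048)]
pods = [
    ("api", 1500, 2048),
    ("cache", 500, 1024),
    ("worker", 1000, 2048),
    ("batch", 800, 512),
]
pending = schedule(pods, nodes)
print({n.name: n.pods for n in nodes}, pending)
```

In this run `api` and `cache` share `node-a`, `worker` fills `node-b`, and `batch` stays pending because no node has 800 millicores left, which is the situation in which a real cluster would wait for capacity or add a node.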

Orchestration of Data Storage: Kubernetes is equipped with a number of storage management options out of the box. This function lets you automatically mount the storage system of your choice, whether it be local storage, a public cloud provider like Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP), or any other network storage system.

Load Balancing and Service Discovery: Kubernetes takes care of networking and communication for you by dynamically assigning IP addresses to Pods and giving a group of containers a single DNS name, then load-balancing traffic across that group within the cluster.
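As a rough illustration, the sketch below builds a Service manifest that selects Pods by label and derives the DNS name other workloads would use to reach it. The service name, namespace, and ports are hypothetical, and `cluster.local` is the default cluster domain, which an administrator may have changed.

```python
def service_manifest(name: str, namespace: str, port: int, target_port: int) -> dict:
    """Build a Service that load-balances across Pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def cluster_dns_name(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name under which the Service is resolvable."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


service = service_manifest("web", "default", port=80, target_port=8080)
dns_name = cluster_dns_name("web", "default")
print(dns_name)
```

Clients inside the cluster connect to the DNS name and never need to know the IP addresses of the individual Pods behind it.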

Manage Confidential Information and Settings: Kubernetes allows you to alter the application’s settings and roll out new secrets without having to rebuild your image or risk exposing sensitive information in your stack’s configuration.
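Here is a small sketch of what a Secret looks like as a manifest. Values under `data` are base64-encoded, which the helper below takes care of; the secret name and the credentials are placeholders. Note that base64 is an encoding, not encryption, so access to Secrets still has to be restricted.

```python
import base64


def secret_manifest(name: str, values: dict) -> dict:
    """Build an Opaque Secret, base64-encoding every value as the API expects."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


# Placeholder credentials for illustration only.
secret = secret_manifest("db-credentials", {"username": "admin", "password": "change-me"})
print(secret["data"]["username"])
```

Because the Secret lives outside the image, rotating the password means updating this object rather than rebuilding and redeploying the container image.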

Self-Healing: Failed containers are restarted automatically. If a node fails, the containers it holds are rescheduled onto other nodes. If containers fail to respond to user-defined health checks, Kubernetes keeps traffic away from them until they are operational.
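Self-healing is usually described as a reconciliation loop: compare the desired state with the observed state and correct the difference. The following toy model captures that idea in a few lines. It is a deliberately simplified sketch, not the logic of any real Kubernetes controller, and the `web-N` pod names are made up.

```python
def reconcile(desired_replicas: int, pods: dict) -> dict:
    """Run one reconciliation pass and return the resulting pod set.

    pods maps a pod name to its status, either "Running" or "Failed".
    Failed pods are dropped, and replacements with fresh names are created
    until the desired replica count is reached. Surplus pods are removed.
    """
    healthy = {name: status for name, status in pods.items() if status == "Running"}

    counter = 0
    while len(healthy) < desired_replicas:
        candidate = f"web-{counter}"
        # Never reuse the name of a pod that existed before this pass.
        if candidate not in pods and candidate not in healthy:
            healthy[candidate] = "Running"
        counter += 1

    for name in sorted(healthy)[desired_replicas:]:
        del healthy[name]
    return healthy


observed = {"web-0": "Running", "web-1": "Failed", "web-2": "Running"}
after_first_pass = reconcile(3, observed)
after_second_pass = reconcile(3, after_first_pass)
print(sorted(after_first_pass))
```

The second pass changes nothing, which is the property that matters: once the observed state matches the desired state, the loop has nothing left to do.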

3.3 Key Benefits of Using Kubernetes

Following are some key benefits of using Kubernetes:

- Container deployment on a cluster of computers is planned and executed automatically.

- It load-balances traffic and exposes containers over the network.

The Kubernetes API makes it easy to do client-side load balancing. The Endpoints Load Balancer is a great example. https://t.co/jPL4qz7sMQ pic.twitter.com/oJxTbk7Wzx

— Kelsey Hightower (@kelseyhightower) November 6, 2016

- New containers are started automatically to deal with large loads.

- It heals itself by replacing failed containers.

- It rolls out application updates and checks for problems in their functioning.

- Storage orchestration is fully automated.

- It allows for dynamic volume provisioning.
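The automatic scaling mentioned in the list above is handled by the Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule and clamps the result to a minimum and maximum. It leaves out refinements of the real controller, such as its tolerance band and stabilization window, and the default bounds are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Apply the basic HPA scaling rule and clamp to the allowed range."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# Four replicas averaging 90% CPU against a 60% target: scale out.
scale_out = desired_replicas(4, current_metric=90, target_metric=60)
# Four replicas averaging 30% CPU against a 60% target: scale in.
scale_in = desired_replicas(4, current_metric=30, target_metric=60)
print(scale_out, scale_in)
```

The metric can be CPU utilization, memory, or a custom value; what matters is the ratio between the current and the target reading.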

3.4 Container Orchestration with Kubernetes

With Kubernetes, you can manage a group of virtual machines and make them execute containers depending on the resources each container needs. Kubernetes’ primary functional unit is the pod, which is a collection of containers. You can control the lifetime of these containers and pods to keep your apps online at any scale.

4. How Does Kubernetes Work?

A Kubernetes cluster is made up of nodes that run containerized apps. Each cluster has at least one worker node, and the worker nodes host the pods. Meanwhile, the control plane manages the pods and worker nodes in the cluster.

5. Kubernetes vs Docker: Key Comparison

In the following table, Kubernetes is compared with Docker Swarm rather than with Docker itself, because those two are direct competitors in terms of functionality.

| Key Points | Docker Swarm | Kubernetes |
|---|---|---|
| Launch year | 2013 | 2014 |
| Created by | Docker Inc. | Google |
| Installation & cluster configuration | Easy installation. Setting up the cluster is challenging and complicated. | Somewhat complicated installation, but the cluster setup is simple. |
| Data volume | Shares storage volumes with any other container. | Allows containers running within a single Pod to use the same set of storage volumes. |
| Auto-scaling | Cannot perform | Can perform |
| Load balancing | Automatic process | Manual process |
| Scalability | Easier to scale up than Kubernetes, but without the same substantial cluster power. | Scaling up is more cumbersome than with Docker Swarm, but it ensures a more robust cluster environment. |
| Monitoring and logging | Relies on third-party tools, such as ELK. | It has a built-in monitoring and logging system. |
| Updates | Agent updates can be performed in place. | In-place upgrades to a cluster are possible. |
| Tolerance index | A lot of room for error. | Not much room for error. |
| Container limit | There is a limit of 95,000 containers. | Capped at a maximum of 300,000 containers. |
| Optimized for | Tailored specifically for use in a single, massive cluster. | Designed to work well with a wide variety of smaller clusters. |
| Node support | Supports more than 2,000 nodes. | The maximum number of nodes that may be supported is 5,000. |
| Compatibility | Less extensive and less customizable. | More extensive and highly customizable. |
| Large clusters | Speed is taken into account for robust cluster states. | Provides the ability to deploy and scale containers, even across massive clusters, without sacrificing performance. |
| Community | Active developer community which regularly updates the software. | Has extensive backing from the open source community and major corporations like Google, Amazon, Microsoft, and IBM. |
| Companies using | Spotify, Pinterest, eBay, Twitter, etc. | 9GAG, Intuit, Buffer, Evernote, etc. |

6. Use Cases of Docker

Let’s have a look at the use cases of Docker as compared to Kubernetes.

6.1 Reduce IT/Infrastructure Costs

When working with virtual machines, every instance needs a full copy of a guest OS. Fortunately, Docker doesn’t have this problem. While using Docker, you can run more applications with less infrastructure, and resource use can be optimized more effectively.

For instance, to save money on data storage, development teams might centralize their operations on a single server. Docker’s great scalability also lets you deploy resources as needed and dynamically grow the underlying infrastructure to meet fluctuating demands.

Simply said, Docker increases productivity, which means a team can get the same work done with fewer engineering resources than in a more conventional setting.

6.2 Multi-Environment Standardization

Docker gives everyone in the pipeline access to the same settings as those in production. Think of a software development team that is changing over time. Whenever someone joins, the operating system, databases, Node, Yarn, and so on have to be installed and updated on the newest team member’s machine. Just getting the machines set up might take a few days.

For instance, you will need two versions of a library if different apps depend on different versions of it. You may also have to set custom environment variables before running your scripts. So, what happens if you make some last-minute modifications to dependencies in development but neglect to do the same in production?

Docker creates a container with all the necessary tools and guarantees that there will be no clashes between them. In addition, you may keep tabs on previously unnoticed environmental disruptors. Throughout the CI/CD process, Docker ensures that containers perform consistently by standardizing the environment.

6.3 Speed Up Your CI/CD Pipeline Deployments

Containers, being much more compact and more lightweight than monolithic apps, may be activated in a matter of seconds. Containers in CI/CD pipelines allow for lightning-fast code deployment and easy, rapid modifications to codebases and libraries.

However, keep in mind that prolonged build durations might impede CI/CD rollouts. This arises because each time the CI/CD pipeline is run, it must pull all of its dependencies afresh. Docker’s built-in layer cache makes it simple to work around this build problem. That cache lives on the machine that ran the build, though, so remote runner machines that start from a clean state cannot use it.
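Why the layer cache helps, and why the order of instructions matters, can be shown with a simplified model. In the sketch below each layer gets a key derived from its parent’s key, the instruction text, and, for `COPY` steps, a checksum of the copied files. This is only a conceptual approximation of Docker’s build cache, and the instructions and checksums are invented for the example.

```python
import hashlib


def layer_keys(instructions, file_checksums=None):
    """Return one cache key per instruction, each chained to its parent."""
    file_checksums = file_checksums or {}
    keys = []
    parent = ""
    for instruction in instructions:
        content = file_checksums.get(instruction, "")
        parent = hashlib.sha256(f"{parent}|{instruction}|{content}".encode()).hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old_keys, new_keys) -> int:
    """Count leading layers that can come from cache; the first miss ends reuse."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count


steps = [
    "FROM python:3.12-slim",
    "COPY requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY . .",
]
baseline = layer_keys(steps, {"COPY requirements.txt .": "reqs-v1", "COPY . .": "src-v1"})
code_change = layer_keys(steps, {"COPY requirements.txt .": "reqs-v1", "COPY . .": "src-v2"})
deps_change = layer_keys(steps, {"COPY requirements.txt .": "reqs-v2", "COPY . .": "src-v1"})

print(reused_layers(baseline, code_change), reused_layers(baseline, deps_change))
```

A source-code change invalidates only the last layer, so the dependency installation is reused, whereas a change to `requirements.txt` invalidates that step and everything after it. This is why dependency files are usually copied before the rest of the source.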

6.4 Isolated App Infrastructure

Docker’s isolated application architecture is a major benefit. You may forget about dependency problems because all prerequisites are included in the container’s deployment. Several applications of varying operating systems, platforms, and versions can be deployed to and run simultaneously on a single or numerous computers. Imagine two servers running incompatible versions of the same program. When these servers are operated in their own containers, dependency problems may be avoided.

Docker also lets you open a shell inside a running container (for example with `docker exec`), which may be used for automation and troubleshooting. As each service runs in its own separate container, it’s simple to track everything happening within that container and spot any problems right away. As a consequence, you may run an immutable infrastructure and reduce the frequency of infrastructure-related breakdowns.

6.5 Multi-Cloud or Hybrid Cloud Applications

Because of Docker’s portability, containers may be moved from one server or cloud to another with minimum reconfiguration.

Teams utilizing multi-cloud or hybrid cloud infrastructures may release their applications to any cloud or hybrid cloud environment by packaging them once in containers. They can also quickly relocate apps across clouds or back onto a company’s own servers.

6.6 Microservices-Based Apps

Docker containers are ideally suited for microservices-architected applications. Because of orchestration technologies like Docker Swarm and Kubernetes, developers may launch each microservice in its own container and then combine the containers to construct an entire application.

Theoretically, you can deploy microservices within VMs or bare-metal servers as well. Nevertheless, containers are more suited to microservices apps due to their minimal resource usage and rapid start times, which allow individual microservices to be deployed and modified independently.


7. Use Cases of Kubernetes

Here are some detailed use cases in which Kubernetes plays an important role.

7.1 Container Orchestration

Orchestration of containers streamlines their rollout, administration, scalability, and networking. Orchestration of containers may be employed in any setting wherein containers are deployed. The same application may be deployed to several settings with this method, saving you time and effort in the designing process. Storage, networking, and protection may all be more easily orchestrated when microservices are deployed in containers.

To launch and control containerized operations, Kubernetes is the go-to container orchestration technology. Kubernetes’s straightforward configuration syntax makes it suitable for managing containerized apps throughout host clusters.

7.2 Large Scale App Development

Kubernetes is capable of managing huge applications because of its declarative setup and automation features. Developers can build up the system with fewer interruptions thanks to functions like horizontal pod scaling and load balancing. Kubernetes keeps things operating smoothly even when unexpected events occur, such as spikes in traffic or broken hardware.

Establishing the environment, including IPs, networks, and tools is a difficulty for developers working on large-scale apps. To address this issue, several platforms have begun using Kubernetes, including Glimpse.

Cluster monitoring in Glimpse is handled by a combination of Kubernetes and cloud services including Kube Prometheus Stack, Tiller, and EFK Stack.

7.3 Hybrid and Multi-Cloud Deployments

Several cloud solutions can be integrated during development thanks to hybrid and multi-cloud infrastructure architectures. By utilizing many clouds, businesses may lessen their reliance on any one provider and boost their overall flexibility and application performance.

Application mobility is made easier with Kubernetes in hybrid and multi-cloud setups. Because it works in every setting, it doesn’t require any apps to be written specifically for any one platform.

Services, ingress controllers, and volumes are all Kubernetes ideas that help to hide the underlying system. Kubernetes is an excellent answer to the problem of scalability in a multi-cloud setting because of its in-built auto-healing and fault tolerance.

7.4 CI/CD Software Development

The software development process may be automated with the help of Continuous Integration and Continuous Delivery. Automation and rapid iteration are at the heart of the principles around which CI/CD pipelines are built.

The process of CI/CD pipelines involves DevOps technologies. Together with the Jenkins automation server and Docker, Kubernetes has become widely used as the container orchestrator of preference.

The pipeline will be able to make use of Kubernetes’ automation and resources control features, among others, if a CI/CD process is established.

7.5 Lift and Shift from Servers to Cloud

This is a common scenario today, as more and more programs move from local servers to the cloud. In a traditional data center, an application runs on physical servers. When keeping it there becomes impractical or too expensive, the team decides to host it in the cloud, either on a virtual machine or in large pods within Kubernetes. Moving it to large K8s pods isn’t a cloud-native strategy, but it can serve as a temporary solution.

To begin, a large application running on-premises is migrated to a similar application running in Kubernetes. After that, it’s broken down into its constituent parts and becomes a standard cloud native program. “Lift and shift” describes this approach, and it’s a perfect example of where Kubernetes shines.

7.6 Cloud-Native Network Functions (CNF)

Large telecommunications firms encountered an issue a few years ago. Their network services relied on components from specialist hardware vendors, such as firewalls and load balancers. Naturally, this made them reliant on the hardware suppliers and limited their maneuverability. Operators had to upgrade their existing devices to provide new features. When a firmware upgrade wasn’t an option, new hardware was required.

To combat this shortcoming, telecommunications companies decided to adopt network function virtualization (NFV) using virtual machines and OpenStack.

8. Using Kubernetes with Docker

When you use Kubernetes with Docker, the former assumes the role of an orchestrator of the Docker containers. So, now Kubernetes can create, handle, and schedule Docker containers to run on certain nodes in the cluster.

As per the requirements, Kubernetes can also scale up or down the number of containers automatically. The networking and storage of Docker containers are also handled by Kubernetes. This makes it easy for developers to build and launch complex containerized apps.
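As a sketch of how this looks from the developer’s side, the helper below builds a Deployment manifest for an image that was previously built with Docker. The application name, image reference, and replica counts are hypothetical. Scaling up or down is then a matter of changing one number and applying the manifest again.

```python
def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment that keeps `replicas` copies of the container running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical image reference; any registry-hosted image would do.
small = deployment_manifest("web", "registry.example.com/web:1.0", replicas=2)
large = deployment_manifest("web", "registry.example.com/web:1.0", replicas=8)
print(small["spec"]["replicas"], large["spec"]["replicas"])
```

The container image stays exactly the same in both manifests. Docker’s job ended when the image was built and pushed, and Kubernetes decides how many copies run and where.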

Using Kubernetes with Docker gives you an opportunity to reap the benefits of both tools. On one hand, you have Docker which can enable you to easily create and package containerized apps. On the other hand, you have Kubernetes offering a robust platform that you can leverage to manage and scale those apps. When used together, these tools work like an ultimate solution to handle containerized apps at scale.

9. Kubernetes vs Docker: The Conclusion

Despite their differences, Kubernetes and Docker are a formidable pair. Docker is the containerization element, allowing programmers to quickly and easily run containers and apps in their own contained environments using the command line. This allows developers to deploy their code throughout their infrastructure without worrying about incompatibilities. As long as the program can be tested on a single node, it should be able to run on any number of nodes in production.

Kubernetes orchestrates Docker containers, launching them remotely within IT environments and scheduling them to handle spikes in demand. Kubernetes is a container orchestration tool that also facilitates load balancing, self-healing, and automated rollouts and rollbacks. It also offers a web-based graphical user interface, the Kubernetes Dashboard, which makes it more approachable.

Kubernetes might be a good option for firms that plan to scale their infrastructure down the road. For those already familiar with Docker, Kubernetes simplifies the transition to scale by reusing current containers and workloads.

FAQs:

Can Kubernetes replace Docker?

Not entirely, because the two do different jobs. Kubernetes needs a container runtime to run containers, but that runtime does not have to be Docker. What Kubernetes does not do is build container images, so you still need Docker or a similar tool to create the containers it orchestrates.

How do Docker and Kubernetes work together?

When used together, they work as a complete solution. While Docker helps you create containers, Kubernetes helps to manage them at run time. You will have Docker to package and ship the app and Kubernetes for deploying and scaling it.

What is Kubernetes used for?

Kubernetes is used to automate the operational tasks of managing containers. It can deploy an app, scale it, roll out changes as requirements evolve, and monitor it, which makes the app easier to manage.

What is Docker used for?

With the help of Docker, we can quickly build, test, and deploy applications. It allows you to package the entire software into small standardized units called containers. From code and libraries to system tools and runtime, the containers possess everything the software needs to run.

Vishal Shah

Vishal Shah has an extensive understanding of multiple application development frameworks and holds an upper hand with newer trends in order to strive and thrive in the dynamic market. He has nurtured his managerial growth in both technical and business aspects and gives his expertise through his blog posts.

