With the increasing use of containers for application deployment, Kubernetes, a portable, open-source container-orchestration platform, has become very popular for deploying and managing containerized applications.

The main reason is that, early on, companies ran applications on physical servers with no defined resource boundaries. For instance, if multiple apps run on one physical server, one app can take up most of the resources while the others underperform with what is left. Containers are a good way to bundle apps and run them independently, and Kubernetes is widely used by Cloud and DevOps companies to manage those containers. It restarts or replaces failed containers, so a failure in one application does not have to take down the others.

Kubernetes offers a framework for running distributed systems and supports common deployment patterns. To know more about Kubernetes and the key Kubernetes challenges, let’s go through this blog.

1. Kubernetes: What It Can Do and Why You Need It

Traditionally, companies deployed applications on physical servers with no specific resource boundaries, so an app’s performance could suffer because of other apps’ resource consumption.

A good way to resolve this is to bundle the apps and run them in containers. In a production environment, the software development team then needs to manage the containers that run the applications and ensure that there is no downtime. For instance, if an application’s container goes down, another one needs to start. Kubernetes makes this behavior easy to handle: it offers a framework that can run distributed systems flexibly.
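The "if a container goes down, another one needs to start" behavior can be pictured as a loop that compares a desired replica count with what is actually running. The sketch below is a toy simulation in plain Python, not Kubernetes code: `ToyController`, `Container`, and the `app-N` names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from itertools import count

_ids = count(1)  # hypothetical source of unique container names


@dataclass
class Container:
    name: str
    healthy: bool = True


@dataclass
class ToyController:
    """Toy stand-in for a controller; this is not a real Kubernetes API."""
    desired_replicas: int
    containers: list = field(default_factory=list)

    def reconcile(self) -> int:
        """Drop failed containers, start replacements, return how many were started."""
        self.containers = [c for c in self.containers if c.healthy]
        missing = max(self.desired_replicas - len(self.containers), 0)
        for _ in range(missing):
            self.containers.append(Container(name=f"app-{next(_ids)}"))
        return missing


controller = ToyController(desired_replicas=3)
controller.reconcile()                      # starts app-1, app-2, app-3
controller.containers[0].healthy = False    # simulate a crash of app-1
started = controller.reconcile()            # replaces the failed container
print(started, [c.name for c in controller.containers])
# 1 ['app-2', 'app-3', 'app-4']
```

The point of the sketch is the shape of the logic: the operator states how many copies should exist, and the loop keeps correcting the running set toward that number.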

Features

Kubernetes provides many great features. Examples:

- data config

- automatic load balancing

- self-healing mechanisms

- easy management of secrets

It’s also extensible: you can use it with various tools and services.

Francesco (@FrancescoCiull4), April 22, 2023

The Kubernetes platform takes care of app failover and scaling, offers deployment patterns, and much more. Besides this, Kubernetes can help in the following areas:

| Area of Concern | How can Kubernetes be helpful? |
|---|---|
| Self-healing | |
| Storage Orchestration | |
| Automate Bin Packing | |
| Automated Rollbacks and Rollouts | |
| Service Discovery and Load Balancing | |

2. Key Kubernetes Challenges Organizations Face and Their Solutions

Many organizations have started adopting the Kubernetes ecosystem, and along the way they face many Kubernetes challenges. They need a robust change program, that is, a shift in mindset and software development practices, seen from two important standpoints: the organization’s and the development teams’. Let’s go through the key Kubernetes challenges and possible solutions:

2.1 Security

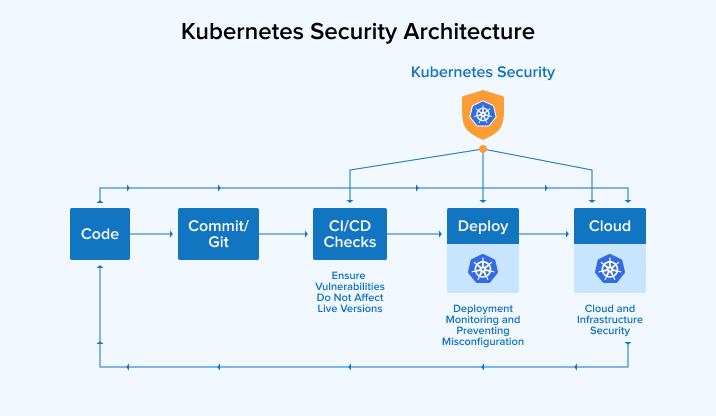

One of the biggest Kubernetes challenges users face while working with Kubernetes is security. The main reason behind it is the software’s vulnerability and complexity. This means that if the software is not properly monitored, it can obstruct vulnerability identification. Let’s look at where K8s best fit within the entire software development process for security:

Basically, when multiple containers are deployed, detecting vulnerabilities becomes difficult and this makes it easy for hackers to break into your system. One of the best examples of the Kubernetes break-in is the Tesla cryptojacking attack. In this attack, the hackers infiltrated the Kubernetes admin console of Tesla which led to the mining of cryptocurrencies on AWS, Tesla’s cloud resource. To avoid such security Kubernetes challenges, here are some recommendations you can follow:

Infrastructure Security

| Area of Concern | Recommendations |
|---|---|
| Network access to API Server | |
| Network access to Nodes | |
| Kubernetes access to cloud provider API | |
| Access to the database (etcd) | |
| Database encryption | |

Containers Security

| Area of Concern | Recommendations |
|---|---|
| Container Vulnerability | |
| Image signing | |
| Disallow privileged users | |
| Storage isolation | |
| Individual Containers | |

Other Recommendations

| Area of Concern | Recommendations |
|---|---|
| Use App Modules | |
| Access Management | |
| Pod Standards | |
| Secure an Ingress with TLS | |
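As one illustration of the "Disallow privileged users" item, a Pod manifest can be checked for containers that request privileged mode before it is applied. The sketch below assumes the manifest has already been loaded into a Python dictionary. The `securityContext.privileged` field is part of the Pod spec, while the function name and the example Pod are hypothetical.

```python
from typing import List


def find_privileged_containers(pod: dict) -> List[str]:
    """Return the names of containers that request privileged mode."""
    spec = pod.get("spec", {})
    containers = spec.get("containers", []) + spec.get("initContainers", [])
    flagged = []
    for container in containers:
        security_context = container.get("securityContext") or {}
        if security_context.get("privileged", False):
            flagged.append(container.get("name", "<unnamed>"))
    return flagged


# Hypothetical Pod manifest, used only for illustration.
example_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [
            {"name": "web", "image": "example/web:1.0",
             "securityContext": {"privileged": False, "runAsNonRoot": True}},
            {"name": "debug", "image": "example/debug:1.0",
             "securityContext": {"privileged": True}},
        ]
    },
}

print(find_privileged_containers(example_pod))  # ['debug']
```

A check like this could run in a CI step. It covers only one setting and is not a substitute for cluster-side policy enforcement.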

2.2 Networking

Kubernetes networking allows admins to move workloads across public, private, and hybrid clouds. Kubernetes is mainly used to package applications with the proper infrastructure and deploy updated versions quickly. It also allows components to communicate with one another and with other apps.

Kubernetes networking is quite different from other networking platforms. It is based on a flat network structure that eliminates the need to map host ports to container ports. There are four key networking problems to focus on:

- Pod to Pod Communication

- Pod to Service Communication

- External to Service Communication

- Highly-coupled container-to-container Communication

Besides this, some areas can also run into multi-tenancy and operational complexity issues:

- If a deployment spans more than one cloud infrastructure, using Kubernetes can make things complex. The same issue arises when mixed workloads run across different architectures.

- Users face complexity when workloads on Kubernetes have to work together with systems that rely on static IP addresses and ports. For instance, IP-based policies are hard to implement because pods use a large, constantly changing set of IP addresses.

- Multi-tenancy is one of the Kubernetes challenges with traditional networking approaches. The issue occurs when multiple workloads share resources: if resources are allocated improperly, one workload affects the others in the same Kubernetes environment.

In these cases, a Container Network Interface (CNI) plug-in can help solve the networking challenges. CNI enables Kubernetes to integrate easily into the infrastructure so that users can effectively access applications on various platforms. A service mesh can also help: it is an infrastructure layer that handles network-based communication between services through APIs, and it gives developers an easier networking and deployment process.

These solutions make container communication smooth, secure, and fast, resulting in a seamless container orchestration process. Developers can also use delivery management platforms to carry out activities like handling Kubernetes clusters and logs.
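Since IP-based policies are hard to maintain when pod addresses keep changing, a common alternative is to select pods by label. The sketch below builds a `NetworkPolicy` manifest as a Python dictionary and prints it as JSON. The policy name, labels, and port are hypothetical, and whether the policy is enforced depends on the CNI plug-in in use.

```python
import json


def allow_ingress_from(target_labels: dict, source_labels: dict, port: int) -> dict:
    """Build a NetworkPolicy manifest that selects pods by label, not by IP."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-frontend-to-backend"},  # hypothetical name
        "spec": {
            # Pods the policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Pods allowed to connect, again chosen by label.
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


policy = allow_ingress_from({"app": "backend"}, {"app": "frontend"}, 8080)
print(json.dumps(policy, indent=2))
```

Because the rule refers to labels, it keeps working when pods are rescheduled and receive new IP addresses.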

2.3 Interoperability

Interoperability can also be a serious issue with Kubernetes. When developers enable interoperable cloud-native applications on this platform, communication between the applications gets tricky. This affects cluster deployment, because an application may have trouble executing on an individual node in the cluster.

This means Kubernetes may not work as well at the production level as it does in development, staging, and QA. Besides this, migrating an enterprise-class production environment brings complexities around governance, interoperability, and performance. Some measures to consider are:

- Invest in interoperability to address production problems.

- Use the same command line, API, and user interface across environments.

- Leverage collaborative projects across organizations that offer services for cloud-native applications.

- Use the Open Service Broker API to enable interoperable cloud-native applications and increase portability between providers and offerings.

2.4 Multi-Cluster Headaches

Most developers and teams start working with a single Kubernetes cluster. However, many teams expect the size or number of their clusters to grow within the next year, and the number of clusters does indeed increase with time.

The reason behind this dramatic increase is that teams split their development, staging, and production environments into separate clusters. Developers can handle 3-4 clusters using open-source tools like k9s.

But the work becomes overwhelming, even for a team, when the number rises beyond two dozen clusters. Multiple Kubernetes clusters can certainly solve complex problems, but managing a high number of them is challenging. Each cluster also becomes more complex as it grows, which makes even a single cluster tougher to manage.

Every Kubernetes cluster requires operations such as updating, maintaining, and securing the cluster and adding or removing nodes. These operations are dynamic, and at scale they require a well-organized Kubernetes multi-cluster management system.

Such a system lets you monitor each cluster and everything happening inside it. By monitoring the health of the cluster environment, the operations team’s SREs can maintain the uniformity of your Kubernetes architecture and the performance of your application.

Multi-cluster management is the solution to multi-cluster headaches. Developers should describe the configuration declaratively rather than connecting to each cluster individually with open-source tools.

This description should define the desired state of the cluster, covering everything from app workloads to infrastructure. Such a detailed description makes it possible to recreate the entire cluster stack from the ground up whenever the need arises.
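The declarative idea can be sketched as a comparison between a desired-state description and what each cluster currently reports. The example below is a plain-Python illustration, not a real multi-cluster tool: the `plan_changes` function, the cluster names, and the resource names are all hypothetical.

```python
def plan_changes(desired: dict, actual: dict) -> dict:
    """Compare desired and actual state per cluster and list the steps to converge."""
    plan = {}
    for cluster in sorted(set(desired) | set(actual)):
        want = desired.get(cluster, {})
        have = actual.get(cluster, {})
        steps = []
        for name in sorted(set(want) | set(have)):
            if name not in have:
                steps.append(("create", name))
            elif name not in want:
                steps.append(("delete", name))
            elif want[name] != have[name]:
                steps.append(("update", name))
        if steps:
            plan[cluster] = steps
    return plan


# Hypothetical desired state, e.g. kept in version control.
desired = {
    "staging": {"web": {"replicas": 2}},
    "prod": {"web": {"replicas": 5}, "ingress": {"tls": True}},
}
# Hypothetical state reported by the clusters.
actual = {
    "staging": {"web": {"replicas": 2}},
    "prod": {"web": {"replicas": 3}, "debug-shell": {}},
}

print(plan_changes(desired, actual))
# {'prod': [('delete', 'debug-shell'), ('create', 'ingress'), ('update', 'web')]}
```

With an empty `actual` state, the same function yields a plan that creates everything, which is the "recreate the entire stack from the ground up" case.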

2.5 Storage

Storage is one of the key Kubernetes challenges that many organizations face. When larger organizations run Kubernetes on their on-premises servers, storage issues start to appear. The main reason is that these companies try to manage the whole storage infrastructure of the business without using cloud resources, which leads to capacity crises and vulnerabilities.

The permanent solution is to move the data to the cloud and reduce the storage on local servers. Besides this, there are some other solutions for Kubernetes storage issues:

- Persistent Volumes:

  - Stateful apps rely on data being persisted and retrieved to run properly, so the data must survive pod, container, or node termination or crashes. This requires persistent storage, that is, storage that outlives any single pod, container, or node.

  - If one runs a stateful application without persistent storage, the data is tied to the lifecycle of the node or pod. If the pod terminates or crashes, all of the data can be lost.

  - As a solution, follow these three simple storage rules:

    - Storage must be available from all pods, nodes, or containers in the Kubernetes cluster.

    - It should not depend on the pod lifecycle.

    - Storage should be available regardless of any crash or app failure.

- Ephemeral Storage:

  - This type of storage holds volatile, temporary data that lives only as long as the instance it is attached to, such as buffers, swap volume, cache, session data, and so on.

  - Containers can use a temporary filesystem (tmpfs) to read and write files, but when a container crashes, this tmpfs is lost.

  - This makes the container start again with a clean slate.

  - Also, multiple containers cannot share the temporary filesystem.

- Other Kubernetes storage challenges can be solved through:

  - Decoupling pods from the storage

  - Container Storage Interface (CSI)

  - Static provisioning and dynamic provisioning with Amazon EKS and Amazon EFS
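Decoupling pods from storage usually means a pod refers to a `PersistentVolumeClaim` by name instead of owning the storage itself. The sketch below builds both manifests as Python dictionaries. The claim name, size, storage class, image, and mount path are hypothetical placeholders, and the storage classes actually available depend on the cluster.

```python
import json


def persistent_volume_claim(name: str, size: str, storage_class: str) -> dict:
    """Build a claim for storage whose lifetime is independent of any pod."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,  # assumed to exist in the cluster
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_using_claim(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Build a Pod that mounts the claim; deleting the pod leaves the claim in place."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{
                "name": "data",
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


claim = persistent_volume_claim("orders-data", "5Gi", "standard")
pod = pod_using_claim("orders-db", "example/db:1.0", "orders-data", "/var/lib/data")
print(json.dumps([claim, pod], indent=2))
```

If the pod crashes and is recreated, the new pod can mount the same claim, which is what the three storage rules above ask for.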

2.6 Cultural Change

Not every organization succeeds even after adopting new processes and team structures and implementing easy-to-use new software development technologies and tools. Organizations also tend to keep changing their portfolio of systems, which can affect work quality and delivery time. For this reason, businesses must establish the right culture and come together to ensure that innovation happens at the intersection of business and technology.

Apart from this, companies should promote autonomous teams that have the freedom to create their own work practices and internal goals. This motivates them to create high-quality, reliable output.

Once they have high-quality, reliable output, companies should focus on reducing uncertainty and building confidence, which is possible by encouraging experimentation. An organization that is constantly active, trying new things, and re-assessing is better suited to create an adaptive architecture that is easy to roll back and can be expanded with little risk.

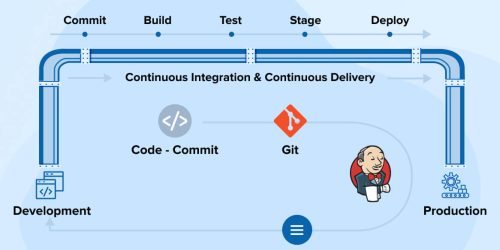

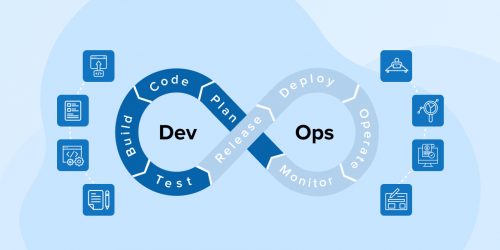

Automation is essential when it comes to evolutionary architecture. Adopting a new architecture and handling the changes it brings effectively includes provisioning end-to-end infrastructure as code with tools like Ansible and Terraform. Automation can also include adhering to software development lifecycle practices such as testable infrastructure with continuous integration, version control, and more. Companies that nurture such an environment lean towards creating iterative cycles, safety nets, and space for exploration.

2.7 Scaling

Any business aims to increase its scope of operations over time, but not every business succeeds, because its infrastructure may be poorly equipped to scale. That is a major disadvantage. Because of their complex nature, Kubernetes microservices generate a huge amount of data to be deployed, diagnosed, and fixed, which can be a daunting task, so scaling is difficult without automation. For any company working with mission-critical or real-time apps, or offering customer-facing services through Kubernetes, outages are very damaging to user experience and revenue.

To resolve such issues, an open-source container manager for running Kubernetes can be a solution, as it helps in managing and scaling apps in multi-cloud or on-premises environments. Such a manager offers:

- Easy scaling of clusters and pods.

- Infrastructure management across multiple clouds and clusters.

- User-friendly interface for deployment.

- Easy management of RBAC, workload, and project guidelines.

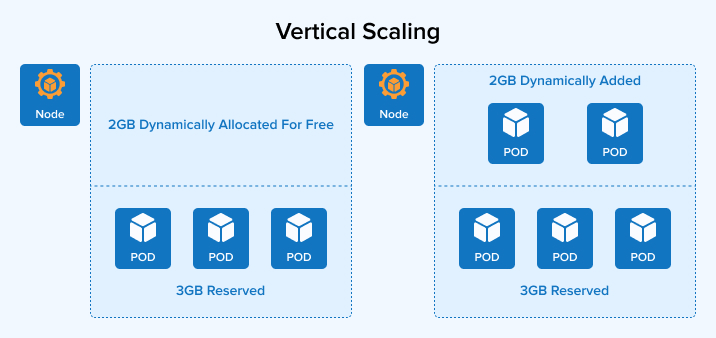

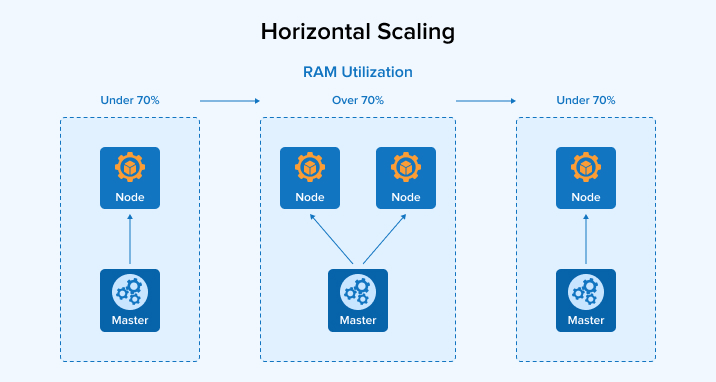

Scaling can be done Vertical as well as Horizontal.

- Suppose, an application requires roughly 3 GiB RAM, and there is not much going on the cluster, then you will require only one node. But for the safer side you can have a 5GiB node where 3 GiB node will be in use and the remaining 2 GiB in stash. So, when your application starts consuming more resources, you can immediately have those 2 GiB node available. And you start paying for those resources when in use. This is Vertical Scaling.

- K8s also supports horizontal auto-scaling. New nodes will be added to a cluster whenever CPU, RAM, Disk Storage, I/O reaches a certain usage limit. New nodes will be added as per the cluster topology. Let’s understand it from following image:
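For pod replicas, the Horizontal Pod Autoscaler documentation describes the core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a small tolerance of 1. The sketch below reproduces only that arithmetic. The function name, the replica bounds, and the sample numbers are assumptions, and the real autoscaler also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified replica calculation: scale in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target, do nothing
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas at 90% average CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))   # 6
# 4 replicas at 30% average CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))   # 2
```

Adding nodes is a separate concern, typically handled by a cluster autoscaler when new pods cannot be scheduled on the existing nodes.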

2.8 Deployment

The last challenge on our list is the deployment of containers.

What is a deployment in K8s?

A K8s Deployment defines the desired state of a containerized app, managing:

- its rollout

- updates

- scaling

It ensures the correct number of replicas (multiple containers with the same image) and smooth transitions between versions.

Francesco (@FrancescoCiull4), April 22, 2023
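A Deployment as described above can be written as a manifest that states the replica count, the container image, and how updates roll out. The sketch below builds one as a Python dictionary and prints it as JSON. The app name, image tag, and rolling-update limits are hypothetical values chosen for illustration.

```python
import json


def deployment(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment manifest with a rolling-update strategy."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                  # desired number of pods
            "selector": {"matchLabels": labels},   # must match the template labels
            "strategy": {
                "type": "RollingUpdate",
                # Replace pods gradually so some replicas stay available.
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment("web", "example/web:1.1", replicas=3)
print(json.dumps(manifest, indent=2))
```

Changing the image tag and re-applying the manifest is what triggers a rollout. The replica count and strategy stay declared in one place.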

Companies sometimes face issues while deploying Kubernetes containers because Kubernetes deployment can require various manual scripts. The process becomes difficult and slow when the development team has to perform many deployments using manual scripts. For such issues, some of the best Kubernetes practices are:

- Spinnaker pipeline for automated end-to-end deployment.

- Inbuilt deployment strategies.

- Constant verification of new artifacts.

- Fixing container image configuration issues.

- Fixing node availability issues.

Common Errors Related to Kubernetes Deployments and Their Possible Solutions:

| Common errors | Possible Solution |
|---|---|
| Invalid Container Image Configuration | |
| Node availability issues | |
| Container startup issues | |
| Missing or misconfigured configuration issues | |
| Persistent volume mount failures | |
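When triaging a failed rollout, the status and event reasons that Kubernetes reports can be mapped roughly onto the error categories in the table above. The sketch below is an approximate lookup, not an official classification: the reason strings are ones Kubernetes commonly reports, while the grouping and the `classify` helper are assumptions made for illustration.

```python
# Rough, assumed mapping from reported reasons to the table's categories.
CATEGORY_BY_REASON = {
    "ErrImagePull": "Invalid Container Image Configuration",
    "ImagePullBackOff": "Invalid Container Image Configuration",
    "InvalidImageName": "Invalid Container Image Configuration",
    "FailedScheduling": "Node availability issues",
    "CrashLoopBackOff": "Container startup issues",
    "RunContainerError": "Container startup issues",
    "CreateContainerConfigError": "Missing or misconfigured configuration issues",
    "FailedMount": "Persistent volume mount failures",
    "FailedAttachVolume": "Persistent volume mount failures",
}


def classify(reason: str) -> str:
    """Return the table category for a reported reason, if one is known."""
    return CATEGORY_BY_REASON.get(reason, "Unclassified")


for reason in ["ImagePullBackOff", "FailedScheduling", "FailedMount", "OOMKilled"]:
    print(f"{reason}: {classify(reason)}")
```

A lookup like this only narrows down where to look. The underlying cause still has to be confirmed from the pod's events and logs.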

3. How have Big Organizations Benefited after Using Kubernetes?

Here are some examples of how big organizations have benefited from Kubernetes:

| Organization | Challenge | Solution | Impact |
|---|---|---|---|
| Booking.com | After Booking.com migrated to the OpenShift platform, accessing infrastructure was easy for developers, but because Kubernetes was abstracted away, scaling support wasn’t easy for the team. | After using OpenShift for a year, the team created its own vanilla Kubernetes platform and asked the developers to learn Kubernetes. | Previously, creating a new service took a few days; with containers, it can be created within 10 minutes. |
| AppDirect | AppDirect, the end-to-end eCommerce platform, was deployed on Tomcat infrastructure, where the process was complex because of various manual steps, so better infrastructure was needed. | The company needed a solution that lets teams deploy services quicker. After trying various technologies, it chose Kubernetes, which enabled the creation of 50 microservices and 15 Kubernetes clusters. | Kubernetes helped the engineering team achieve 10X growth and enabled the company to have a system with additional features that boost velocity. |
| Babylon | Babylon’s products leveraged AI and ML, but providing computing power in-house was difficult. The company also wanted to expand. | The solution was to integrate the apps with Kubernetes and have the team turn to Kubeflow. | Teams were able to compute clinical validations within minutes after adopting Kubernetes infrastructure, and it also helped Babylon enable cloud-native platforms in various countries. |

4. Conclusion

As seen in this blog, Kubernetes is an open-source container orchestration solution that helps businesses manage and scale their containers, clusters, nodes, and deployments. Because it is a unique system, developers face many different challenges while working with its infrastructure and while managing and scaling business processes between cloud providers. But once they master this tool, Kubernetes offers a simple, declarative model for programming complex deployments.

FAQs:

What is the biggest disadvantage of Kubernetes?

Kubernetes has a steep learning curve, and it takes time to learn how to implement it effectively. Moreover, setting up and maintaining Kubernetes is complex, especially for small teams with very limited resources.

What are the challenges of adopting Kubernetes?

Some of the biggest challenges you may face while adopting Kubernetes are network visibility and operability, cluster stability, security, and storage issues.

What makes Kubernetes difficult?

Google created Kubernetes to handle large clusters at scale. So, its architecture is highly distributed and built to scale. With microservices at the core of its architecture, Kubernetes becomes complex to use.

Mohit Savaliya

Mohit Savaliya is looking after operations at TatvaSoft and leverages his technical background to understand Microservices architecture. He showcases his technical expertise through bylines, collaborating with development teams and brings out the best trending topics in Cloud and DevOps.
